We are counting down to the 2018 edition of the CWTS Leiden Ranking, to be released on May 16. Producing a new edition of the ranking is a major task. As always, we are putting a lot of effort into careful data cleaning and accurate calculation of bibliometric indicators. This ensures that the comparisons facilitated by the ranking rely on the best possible basis.

Access to the Leiden Ranking is provided through a website. We are continually working on improving this site. In recent years, we have also become increasingly interested in understanding more systematically how visitors to the site use the ranking. Based on our findings (detailed in a scientific paper), here are the highlights of our analysis of visits to the website of last year’s edition of the ranking.

Peak of attention

We calculated the average daily number of visits to the Leiden Ranking website for each month from May 2017 to February 2018. In a typical month, there are about 200 visits per day. However, the number of visits shows a marked peak in May 2017, the month in which the 2017 edition of the Leiden Ranking was released. In this month, the website was visited nearly 2000 times per day.
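As a rough illustration of this kind of calculation, the sketch below averages visits per day for each month. It assumes a hypothetical list of visit dates and divides by the number of calendar days in the month; the function name `average_daily_visits` and the toy data are ours, and this is not necessarily how the figures reported here were derived.

```python
from calendar import monthrange
from collections import Counter
from datetime import date


def average_daily_visits(visit_dates):
    """Return {(year, month): average number of visits per calendar day}."""
    # Count how many visits fall in each (year, month).
    per_month = Counter((d.year, d.month) for d in visit_dates)
    # Divide each monthly total by the number of days in that month.
    return {
        (year, month): count / monthrange(year, month)[1]
        for (year, month), count in per_month.items()
    }


# Hypothetical toy log: 62 visits in May 2017 and 28 visits in February 2018.
visits = [date(2017, 5, 16)] * 62 + [date(2018, 2, 1)] * 28
averages = average_daily_visits(visits)
```

With real log data, a month in which the site was only partly tracked would need a different denominator than the full number of calendar days.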

From far and wide

Visitors to the Leiden Ranking website come from many different countries worldwide, although there is a bias toward European countries. The large number of visitors from Australia, Turkey, Iran, and South Korea is quite remarkable. For each of these countries, the number of visitors is larger than half the number of visitors from the US, and each of these countries contributes more visitors than China.

Marked preference for ‘league tables’

The motto of the Leiden Ranking is ‘Moving beyond just ranking’. Performance of organizations as complex as universities must be understood from a multidimensional perspective, as set out in our principles for responsible use of university rankings. Ranking universities according to one single criterion is not a good idea. To emphasize the importance of a multidimensional perspective, the Leiden Ranking website offers three views: the list view, the chart view, and the map view.

Each view has its strengths, and foregrounds a specific way of comparing universities. The list view is closest to the traditional idea of a university ranking as a one-dimensional list, a ‘league table’, supposedly with the ‘best’ at the top. However, unlike many league tables, the list view always presents multiple indicators and enables the user to choose the indicator based on which universities are ranked. The chart view shows the performance of universities according to a combination of two indicators, focusing on the actual values of the indicators rather than the ranks implied by these values. The map view offers a geographical perspective on universities, enabling comparisons of universities in the same part of the world.

Visitors to the Leiden Ranking website turn out to be mostly interested in the traditional list view. This view is used in more than 90% of all visits to the website, while each of the other two views is used in only about 10% of the visits. (Note that multiple views can be used in a single visit to the website.) Hence, despite our efforts to offer alternative perspectives, visitors tend to consult the Leiden Ranking mainly from a league table point of view.
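Because a single visit can use more than one view, these percentages do not need to add up to 100%. The sketch below shows the idea, assuming each visit is recorded as a set of view names; the function name `view_shares` and the toy data are hypothetical and only meant to illustrate the counting.

```python
from collections import Counter


def view_shares(visits):
    """Return the share of visits in which each view was used at least once."""
    visits = list(visits)
    # Count each view at most once per visit, however often it was opened.
    counts = Counter(view for views in visits for view in set(views))
    return {view: counts[view] / len(visits) for view in counts}


# Hypothetical toy data: 10 visits, one of which uses two views.
toy_visits = [{"list"}] * 8 + [{"list", "chart"}] + [{"map"}]
shares = view_shares(toy_visits)
```

In this toy example the list view appears in 9 of 10 visits and each of the other views in 1 of 10, so the shares sum to more than 100%.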

Most popular indicators

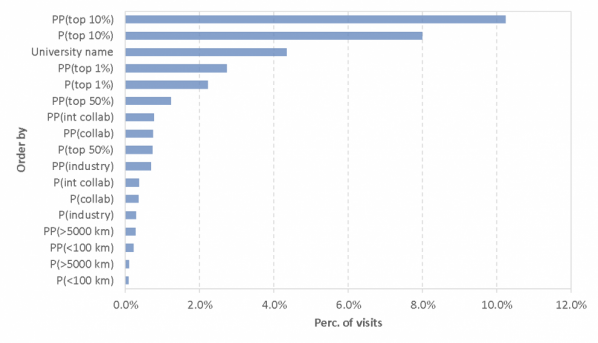

In the list view, universities are ranked by default by their number of publications, represented by the P indicator. This indicator is presented together with two other indicators: the P(top 10%) indicator and the PP(top 10%) indicator. These indicators show, respectively, the absolute number of highly cited publications of a university and a university’s percentage of highly cited publications. According to the philosophy of the Leiden Ranking, it is imperative that users of the ranking choose the indicators most relevant for their purpose. Users can then rank universities based on these indicators.

Below we show the indicators most often used to rank universities. The P indicator is the default choice and is therefore the starting point of all visits to the list view. For this reason, the P indicator is not included in the statistics presented below. Remarkably, each of the other indicators is used only in a small share of all visits. The PP(top 10%) indicator is used most often, but even this indicator is used only in slightly more than 10% of the visits. The P(top 10%) indicator is used in 8% of the visits, while in about 4% of the visits, universities are ranked alphabetically based on their name. All other indicators put together account for no more than 3% of all visits to the list view. Overall, there is a preference for impact indicators over collaboration indicators. Likewise, size-independent indicators, such as PP(top 10%), tend to be preferred over their size-dependent counterparts, such as P(top 10%).

Use follows presentation

In an experiment carried out during one week in March 2018, we changed the list view to a new default: universities were ordered alphabetically instead of by their publication output. As can be seen below, in more than 25% of all visits to the list view, the visitor decided to switch ‘back’ to the ranking by publication output, returning to the traditional default presentation of the list view. Interestingly, however, this experiment led to a more varied use of the Leiden Ranking. When alphabetical order was the default, the PP(top 10%) and P(top 10%) indicators were used more than twice as often. For instance, the PP(top 10%) indicator was used in about 22% of all visits, while normally it is used only in somewhat more than 10% of the visits. This demonstrates that the default choices in the presentation of the Leiden Ranking shape how the ranking is used.

Lessons learned

The above analysis not only offers various interesting insights but also raises many new questions: Why do some countries show much more interest in the Leiden Ranking than others? Why are visitors to the Leiden Ranking website so strongly attracted to the league table perspective offered by the list view? Why do users of the list view tend to stick to the default indicator for ranking universities, and which indicator would they actually prefer to be the default choice? We invite you, as users of the Leiden Ranking, to share your perspective on these questions. Please let us know your opinion by commenting on this blog post or by contacting us by e-mail.

Thanks to Anne Beaulieu for her extensive help in improving this blog post.